Why the Gemini 2.5 Computer Model Is a Game-Changer for Smarter, Faster AI Performance

Discover why the Gemini 2.5 computer model is emerging as a game-changer for smarter, faster AI performance. This concise, research-driven teaser highlights its efficiency gains, breakthrough capabilities, and real-world advantages shaping next-gen computing.

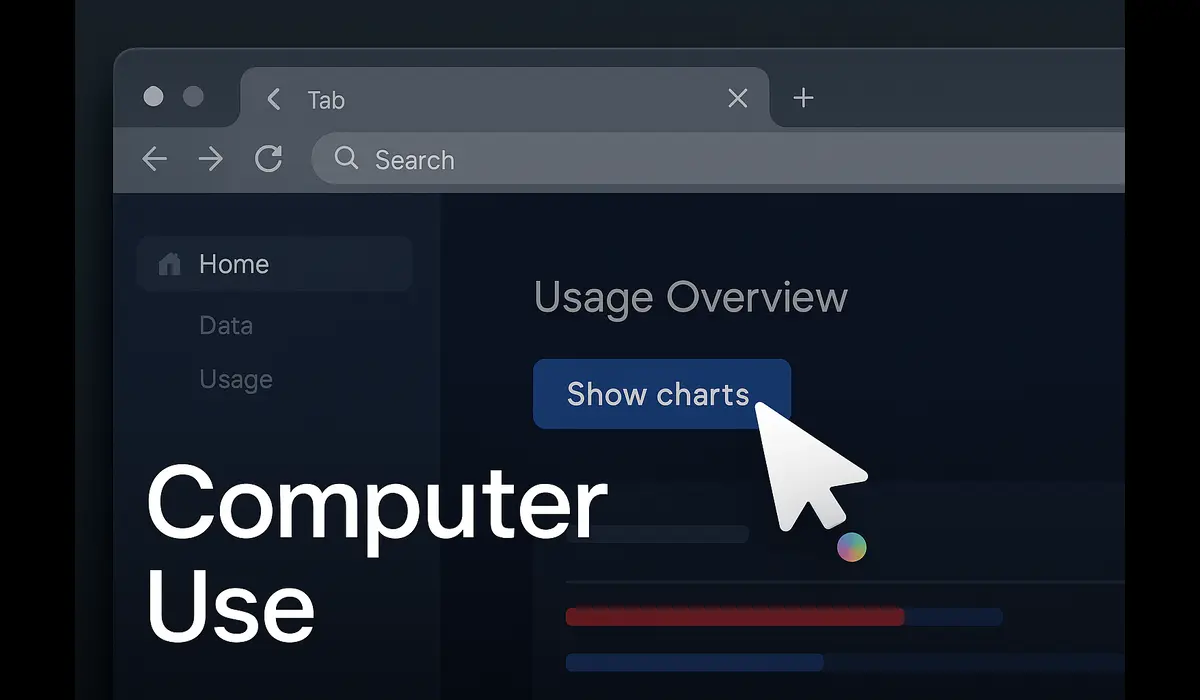

Developers keep asking how the Gemini 2.5 computer model improves AI performance. In its latest “Computer Use” iteration, Gemini 2.5 lets AI agents interact with web interfaces directly, clicking, typing, and navigating much as a person operates a browser.

- Visual understanding + reasoning built in

- Lower latency in web tasks

- Enhanced control over UI navigation

- Smarter agents with better benchmarks


Quick Context

The “Gemini 2.5 computer model” refers to Gemini 2.5 Computer Use, a specialized Google model that lets agents operate user interfaces in browsers and apps rather than relying solely on APIs.

Latest Developments in Gemini 2.5

In October 2025, Google released Gemini 2.5 Computer Use in preview through the Gemini API. It is a specialized model built on Gemini 2.5 Pro’s visual understanding and reasoning capabilities. It lets agents read screenshots, reason over UI context, and take actions such as clicking buttons, entering text, and dragging UI elements.
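Actions like these arrive at your client as structured proposals that your own code must carry out. The sketch below shows one way to do that with an allow-list dispatch table. The action names (`click_at`, `type_text_at`, `drag_and_drop`) and their argument keys are modeled on Google’s preview documentation and should be treated as assumptions to check against the current docs; `FakeBrowser` is a hypothetical stand-in that records calls instead of driving a real browser.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class FakeBrowser:
    """Hypothetical browser driver that records calls instead of executing them."""

    log: List[str] = field(default_factory=list)

    def click(self, x: int, y: int) -> None:
        self.log.append(f"click({x},{y})")

    def type_text(self, x: int, y: int, text: str) -> None:
        self.log.append(f"type({x},{y},{text!r})")

    def drag(self, x: int, y: int, dest_x: int, dest_y: int) -> None:
        self.log.append(f"drag({x},{y}->{dest_x},{dest_y})")


# Allow-list mapping assumed action names to browser operations.
HANDLERS: Dict[str, Callable[[FakeBrowser, Dict[str, Any]], None]] = {
    "click_at": lambda b, a: b.click(a["x"], a["y"]),
    "type_text_at": lambda b, a: b.type_text(a["x"], a["y"], a["text"]),
    "drag_and_drop": lambda b, a: b.drag(
        a["x"], a["y"], a["destination_x"], a["destination_y"]
    ),
}


def execute(browser: FakeBrowser, name: str, args: Dict[str, Any]) -> None:
    """Run one model-proposed action, rejecting anything not on the allow-list."""
    handler = HANDLERS.get(name)
    if handler is None:
        raise ValueError(f"unsupported action: {name}")
    handler(browser, args)


browser = FakeBrowser()
execute(browser, "click_at", {"x": 120, "y": 40})
execute(browser, "type_text_at", {"x": 120, "y": 40, "text": "hello"})
print(browser.log)  # ['click(120,40)', "type(120,40,'hello')"]
```

Rejecting unknown action names keeps the agent from executing anything you did not explicitly wire up.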

Google reports that this version outperforms earlier models and leading alternatives on several web and mobile control benchmarks, with both lower latency and higher accuracy.


Core Innovations & Key Features

Visual-Context Reasoning

Gemini 2.5’s key improvement is combining visual perception with reasoning: it interprets UI layouts, icons, and contextual cues, so agents can identify buttons, menus, and fields and act on them.
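To act on an element it has identified, the agent has to tell the client where to click. Google’s documentation describes the model returning coordinates on a normalized 1000 by 1000 grid rather than in screenshot pixels; assuming that convention, the client converts them to real pixels before acting. `denormalize` is a hypothetical helper, not part of any SDK.

```python
from typing import Tuple


def denormalize(x: int, y: int, width: int, height: int, grid: int = 1000) -> Tuple[int, int]:
    """Convert a point on a normalized grid-by-grid canvas to screenshot pixels.

    Assumes the model reports x and y in the range 0..grid-1 regardless of
    the actual screen size (grid=1000 is the assumed convention).
    """
    if not (0 <= x < grid and 0 <= y < grid):
        raise ValueError(f"coordinate outside normalized grid: ({x}, {y})")
    # Integer arithmetic avoids floating-point rounding surprises.
    return x * width // grid, y * height // grid


print(denormalize(500, 500, 1440, 900))  # (720, 450)
```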

Looped Interaction & Feedback

Each UI action returns a fresh screenshot and the current URL, which feed back into the agent’s reasoning loop. This iterative approach mirrors how humans interact with software.
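A minimal sketch of that observe-act loop, under stated assumptions: `Model`, `Environment`, `Observation`, and `Action` are hypothetical interfaces, not the Gemini SDK, and the model signals completion by returning `None`. The toy classes at the bottom exist only to make the loop runnable.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional, Protocol


@dataclass
class Observation:
    screenshot: bytes  # image bytes captured after the previous action
    url: str


@dataclass
class Action:
    name: str
    args: Dict[str, Any]


class Model(Protocol):
    def next_action(self, goal: str, obs: Observation) -> Optional[Action]: ...


class Environment(Protocol):
    def observe(self) -> Observation: ...

    def perform(self, action: Action) -> Observation: ...


def run_agent(goal: str, model: Model, env: Environment, max_steps: int = 20) -> List[Action]:
    """Observe, ask the model for one action, execute it, and repeat.

    Stops when the model returns None (goal judged complete) or after max_steps.
    """
    history: List[Action] = []
    obs = env.observe()
    for _ in range(max_steps):
        action = model.next_action(goal, obs)
        if action is None:
            break
        # The new screenshot and URL become the input for the next turn.
        obs = env.perform(action)
        history.append(action)
    return history


# Toy implementations so the loop can run without a model or browser.
class ScriptedModel:
    def next_action(self, goal: str, obs: Observation) -> Optional[Action]:
        if obs.url.endswith("/done"):
            return None
        return Action("click_at", {"x": 500, "y": 500})


class ToyEnv:
    def __init__(self) -> None:
        self.url = "https://example.com/start"

    def observe(self) -> Observation:
        return Observation(b"", self.url)

    def perform(self, action: Action) -> Observation:
        self.url = "https://example.com/done"  # pretend the click submitted a form
        return self.observe()


steps = run_agent("submit the form", ScriptedModel(), ToyEnv())
print([s.name for s in steps])  # ['click_at']
```

The `max_steps` cap is a design choice: it bounds cost and stops an agent that never converges.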

Lower Latency in UI Tasks

Google reports that the Gemini 2.5 computer model completes browser tasks with lower latency than prior models and competing approaches, shortening the delay between a command and its execution.
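Latency is worth measuring in your own workflows rather than taking it from benchmarks alone. A minimal timing harness, assuming `step` is a hypothetical callable that wraps one model call plus the resulting browser action:

```python
import statistics
import time
from typing import Callable, Dict


def time_steps(step: Callable[[], None], runs: int = 10) -> Dict[str, float]:
    """Call `step` repeatedly and report wall-clock latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        step()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {"median_ms": statistics.median(samples), "max_ms": max(samples)}


# Stand-in step that sleeps about 10 ms; replace with a real model call + UI action.
print(time_steps(lambda: time.sleep(0.01), runs=5))
```

Reporting the median alongside the worst case separates typical responsiveness from occasional slow turns, such as a page that takes long to load.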

Broader Benchmark Gains

Google reports that Gemini 2.5 Pro, the “thinking model” that Computer Use builds on, leads reasoning, coding, math, and multimodal benchmarks without relying on external tools.

Why It Matters

Because the Gemini 2.5 computer model adds UI-level control, it changes the game for agentic applications. Instead of waiting for an API for every service, AI can now navigate user interfaces directly, opening the door to automation across legacy systems, internal tools, and hybrid apps.

This shift significantly broadens the reach of AI agents across real-world domains where APIs are unavailable or limited.

Comparisons & Alternatives

| Model / Approach | Strengths | Limitations |
|---|---|---|
| Gemini 2.5 Computer Use | Can navigate UIs directly with reasoning | Restricted to browser/app surfaces currently |
| Traditional AI + API calls | Stable, controlled environment | Cannot interact with UI-only services |
| Agentic AI hybrids | Mixed capability across domains | Integration overhead, complexity |

The Gemini 2.5 computer model’s main improvement is narrowing the gap between what AI can reason about and what it can actually do in real digital environments.

Evidence & Expert Observations

Google’s own tests show it outperforms alternatives on web and mobile control benchmarks and UI tasks, with lower latency.

Experts note that blending perception and reasoning is a major architectural advancement—elevating agents from mere command interpreters to context-aware actors.

What Developers Should Do

- Test UI agent workflows in sandbox environments using the Gemini 2.5 Computer Use mode.

- Evaluate reasoning depth—use more complex UI flows (menus, dialogs) to benchmark gains.

- Optimize screenshot feedback loops to ensure performance remains smooth.

- Combine with existing tools (search, retrieval)—use Computer Use where API gaps exist.
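For the screenshot feedback loop, one cheap optimization is to notice when actions leave the screen unchanged, which often signals a missed click or a page still loading, so the loop can retry or stop instead of spending more steps. The sketch below is a hypothetical helper that compares SHA-256 digests of consecutive screenshots. Byte-identical comparison is crude: animations or clocks on a page will defeat it.

```python
import hashlib
from typing import Optional


class StallDetector:
    """Flags when consecutive screenshots stop changing."""

    def __init__(self, patience: int = 3) -> None:
        self.patience = patience
        self._last_digest: Optional[str] = None
        self._repeats = 0

    def update(self, screenshot: bytes) -> bool:
        """Record a screenshot; return True after `patience` unchanged screenshots in a row."""
        digest = hashlib.sha256(screenshot).hexdigest()
        if digest == self._last_digest:
            self._repeats += 1
        else:
            self._last_digest = digest
            self._repeats = 0
        return self._repeats >= self.patience


detector = StallDetector(patience=2)
for shot in [b"page-a", b"page-b", b"page-b", b"page-b"]:
    stalled = detector.update(shot)
print(stalled)  # True
```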


FAQs

Q1: Does the Gemini 2.5 computer model require API support?

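No, the target service does not need an API. The model itself is accessed through the Gemini API, but it interacts with interfaces directly through UI actions such as clicking, typing, and dragging.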

Q2: Is this model only for browsers?

Mostly. It is primarily optimized for web browsers, also shows promise for mobile UI control, and is not yet optimized for desktop operating-system control.

Q3: How much does performance improve?

Google reports up to 18% gains in certain benchmarks and reduced latency.

Q4: Will it replace API-driven AI?

Not entirely; it complements APIs—especially where UI-only systems exist.
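One way to combine the two, as a minimal sketch: route each task to a direct API integration when one exists and fall back to a UI agent otherwise. The service names and handler functions here are hypothetical placeholders.

```python
from typing import Callable, Dict, Tuple


def run_task(
    service: str,
    task: str,
    api_handlers: Dict[str, Callable[[str], str]],
    ui_agent: Callable[[str], str],
) -> Tuple[str, str]:
    """Prefer a direct API integration; fall back to the UI agent when none exists.

    Returns (route, result) so callers can log which path handled the task.
    """
    handler = api_handlers.get(service)
    if handler is not None:
        return "api", handler(task)
    return "ui", ui_agent(task)


# Hypothetical services: "crm" has an API, "legacy-erp" is UI-only.
apis = {"crm": lambda t: f"crm-api:{t}"}


def ui_agent(t: str) -> str:
    return f"ui-agent:{t}"


print(run_task("crm", "export report", apis, ui_agent))         # ('api', 'crm-api:export report')
print(run_task("legacy-erp", "export report", apis, ui_agent))  # ('ui', 'ui-agent:export report')
```

Returning the route with the result makes it easy to log how often the UI path is used, which helps decide where an API integration would pay off.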

Key Takeaways

- The Gemini 2.5 computer model improves AI performance by combining UI-level control with reasoning.

- It reduces latency, increases reach, and bridges gaps where APIs don’t exist.

- It outperforms many alternatives in UI and reasoning benchmarks.

- Developers should leverage it selectively in agent pipelines.

Conclusion

The Gemini 2.5 computer model improves AI performance by bridging thought and action, giving agents the ability to operate within user interfaces autonomously. This opens a powerful new frontier in AI application design and reduces dependence on structured APIs.