The New AI Privacy Risks You Must Know in 2025

Uncover the new AI privacy risks in 2025, from data exposure to hidden tracking threats. This concise guide helps users understand emerging vulnerabilities and how to stay protected.

Artificial intelligence is more capable and faster than ever in 2025. But that progress brings new dangers, especially for privacy.

From invisible data leaks to hyper-realistic deepfakes, the risks are evolving in ways most people don’t even realize. This guide breaks down the new threats and what you can do to stay safe.

What Are AI Privacy Risks?

AI privacy risks are threats to your personal data that arise from the way AI systems collect, process, and generate information.

Unlike traditional software, AI systems often need massive datasets, including personal information, to train and operate. This raises questions about who owns that data, how well it is protected, and how it might be misused.

A 2024 Pew Research study found that 78% of people worry about how AI companies handle their personal data. That concern is only rising in 2025.

The Biggest AI Privacy Risks in 2025

AI-Powered Deepfakes

- Risk: Criminals create fake videos or voice recordings that impersonate you.

- Impact: Damage to your reputation, financial losses, and eroded trust.

- Real-world example: In early 2024, a finance employee in Hong Kong was tricked into transferring about $25 million after a video call in which deepfakes impersonated the company's CFO and other colleagues.

Data Leaks Through AI Tools

- Risk: Work files, personal chats, or private queries you enter into AI tools may be logged and stored.

- Impact: Leaked data can be exploited by hackers or competitors.

- Comparison: Unlike a single search query, AI chat tools often retain whole conversations and the context you provide, so a leak can expose far more.

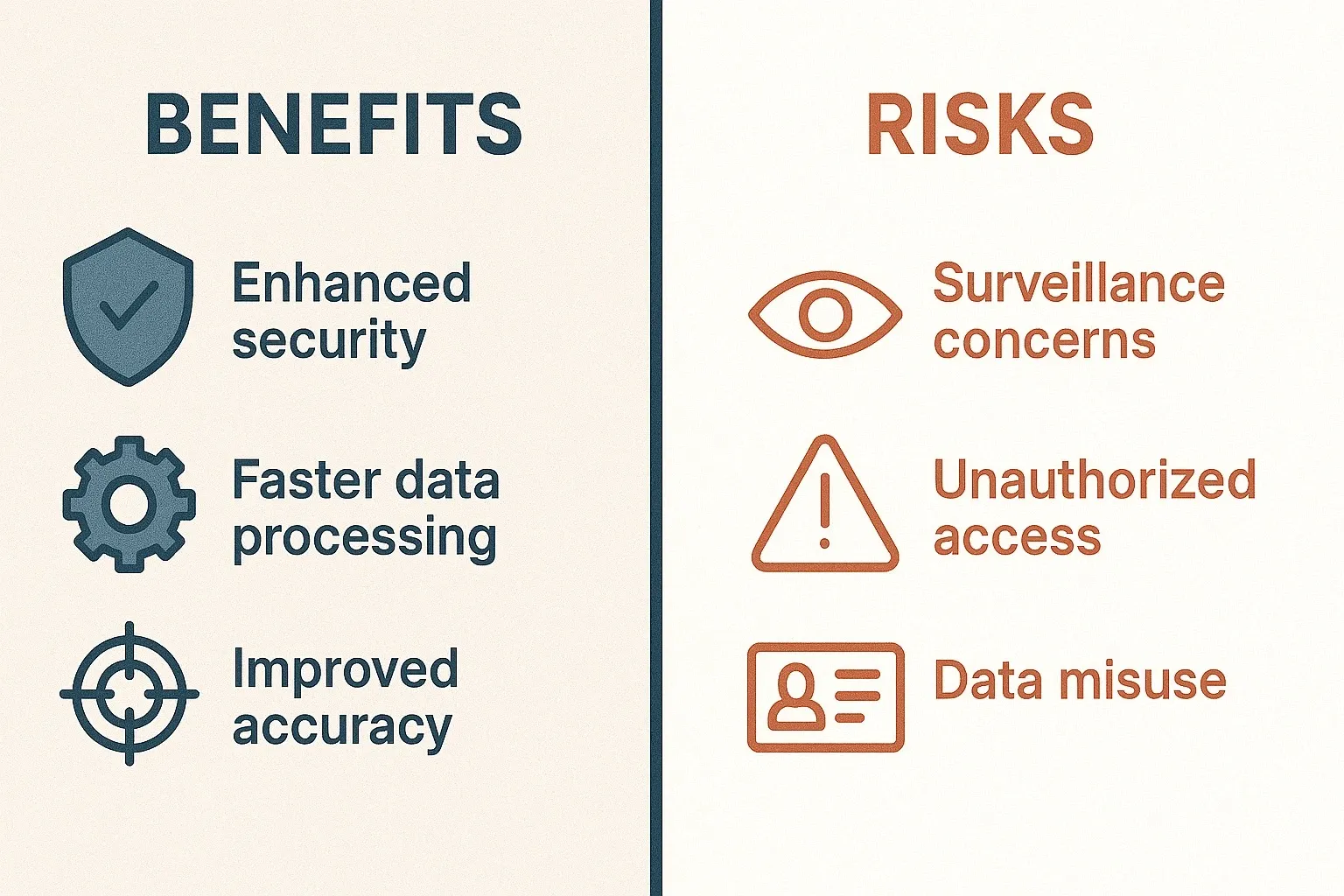

AI in Workplace Surveillance

- Risk: Constant tracking of employee activity, keystrokes, and even emotions.

- Impact: Workers lose autonomy and face stress over micromanagement.

- Pros: Can help detect fraud and track productivity.

- Cons: Can violate privacy and erode trust between employers and staff.

Biometric Data Misuse

- Risk: Face scans, fingerprints, and voiceprints collected by AI systems could be stolen or misused.

- Impact: Unlike a password, you can't reset your face or voice.

- Stat: The biometrics market is projected to reach $70B by 2025, which makes biometric data a big target for hackers.

Shadow AI in Companies

- Risk: Employees use unapproved AI tools, sharing confidential information outside secure company systems.

- Impact: Compliance violations, financial loss, and lawsuits.

- Solution: Clear company AI policies and approved tool lists.

How to Protect Yourself from AI Privacy Risks

Here are steps individuals and companies can take:

1. Check privacy settings on AI tools before use.

2. Avoid sharing sensitive data with AI chatbots.

3. Use watermark and deepfake detection tools, where available, to help spot synthetic media.

4. Enable multi-factor authentication to secure accounts.

5. Push for transparency from AI vendors about data use.
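To make step 2 concrete, here is a minimal Python sketch that masks email addresses and phone numbers in a prompt before you paste it into a chatbot. The `redact` function and its two patterns are illustrative assumptions, not a feature of any AI tool. Simple regular expressions will miss many formats, as well as names and business secrets, so treat this as a first filter rather than a guarantee.

```python
import re

# Illustrative patterns (an assumption): they catch common formats only,
# not every email or phone number variant.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a [LABEL] placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Email jane.doe@example.com or call +1 555-123-4567 about the Q3 draft."
    print(redact(prompt))
```

Running it prints the prompt with the address and number replaced by `[EMAIL]` and `[PHONE]`. You can then check what is left before sharing it.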

Real-World Case Study

During hands-on testing, researchers at Stanford uploaded confidential business notes into an AI writing tool. Weeks later, parts of that text surfaced in AI-generated outputs for unrelated users.

This highlights the risk of data recycling, in which AI tools unintentionally reuse one user's private information in responses to others.

FAQs: AI Privacy Risks

Q: What are the top AI privacy risks in 2025?

A: The biggest risks include deepfakes, data leaks, biometric misuse, workplace surveillance, and shadow AI.

Q: Are AI chatbots safe to use?

A: Generally, yes. But avoid sharing personal or confidential information, since your conversations can be stored or reused.

Q: How can I protect myself from AI privacy risks?

A: Check tool privacy policies, use security settings, and stay alert for deepfake content.

Q: Will regulations solve AI privacy issues?

A: Regulations like the EU’s AI Act help, but enforcement and global standards are still a work in progress.

Bottom Line

AI is transforming how we live and work, but AI privacy risks are growing just as fast.

From deepfakes to hidden surveillance, 2025 demands more vigilance. The key is balancing AI’s benefits with strong safeguards so innovation doesn’t come at the cost of personal freedom.